“Whatever happened with the ozone layer panic, if scientists are so smart?”

We listened to the scientists, and the problem went away.

Didn’t go away, just stopped getting worse at an alarming rate.

Didn’t the hole above Australia close again?

As a kiwi, the amount of sunburn I get every summer would imply it hasn’t.

Yeah but I’m pretty sure that’s just cause the sun is upside down over there or something.

Down there??? The Earth isn’t flat, you say??

The picture on your wall is also flat, still it has up here and down there

Of course it isn’t flat, haven’t you seen a mountain?

I think they meant down there on the map anyway.

I thought it was Australia that’s upside down, and New Zealand doesn’t actually exist?

No, also the massive SO2 that Mt Pinatubo put into the atmosphere slowly went away. And the CFCs.

Pinatubo created more sulfur emissions during its eruption than 10 years of all human coal burning.

And also on top of that we were also wrecking the Ozone.

Nature can always make our mistakes much much worse.

It’s the same as people using the example of the Y2K bug being a non event. Yeah, because globally trillions of dollars were spent fixing it before it became an event.

thanks for the tldr

No

Get that marble brain Reddit-style bs outta here. If you wanna deny, you’re gonna have to come up with a reason that you could be right. Otherwise, we’re just gonna point and laugh at your dumbassery.

Removed by mod

Did you even bother to read it?

Among other things it says: “Based on the Montreal Protocol and the decrease of anthropogenic ozone-depleting substances, scientists currently predict that the global ozone layer will reach its normal state again by around 2050.”

The reason it isn’t discussed as much is because it’s on the mend, and the only newsworthy thing is the larger-than-normal cyclical hole that forms. Another thing mentioned was a volcanic eruption in 2022 that is believed to have contributed to that “larger than normal” hole.

Nothing there disputes the fact that we took action. I worked as a refrigeration tech and we even had to learn about this before we could be EPA certified to handle refrigerants.

Yes. Thank you, cheese stick.

Similar with Y2K — it was only a nothingburger because it was taken seriously, and funded well. But the narrative is sometimes, “yeah lol it was a dud.”

All this hysteria over nuclear weapons is overblown. We’ve known how to build them for 75 years yet there hasn’t been a single one detonated on inhabited American soil. They’re harmless

You even dropped a few accidentally and nothing happened! Complete duds these things really

Yeah but not all people live on American soil…

It’s the American tradition to ignore that

WTF?

Unless that was sarcasm that I missed… 100’s of weapons have been tested on US soil…

inhabited

Not sure what you mean.

The US was inhabited last I checked.

Pretty sure no human lived at the Trinity test site or anywhere else in the test sites where weapons were detonated, especially at the moment of detonation. And I’m pretty sure none have since moved onto those sites either. Hence “inhabited”. It’s not like we nuked cities and towns.

Right… Gotcha. So you’re a ‘change the goalposts to keep making me right as the argument and evidence changes’ kinda person.

No point engaging with your type.

Sounds like you’re the goalposts-mover here, shipmate, and it seems the rest of the readers here agree with me. Maybe this place ain’t your venue.

deleted by creator

The question is, what will happen in 2038 when y2k happens again due to an integer overflow? People are already sounding the alarm but who knows if people will fix all of the systems before it hits.

It’s already been addressed in Linux - not sure about other OSes. They doubled the size of time data so now you can keep using it until after the heat death of the universe. If you’re around then.
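For a rough sense of scale, here is a back-of-the-envelope sketch of how far a signed second counter reaches at 32 and 64 bits. It assumes the counter is a plain signed integer of seconds and uses an average Gregorian year; the constant names are made up for illustration.

```python
# Rough range of a signed seconds counter, in years.
# Assumption: average Gregorian year of 365.2425 days.
SECONDS_PER_YEAR = 365.2425 * 24 * 3600

MAX_INT32 = 2**31 - 1  # largest value of a signed 32-bit counter
MAX_INT64 = 2**63 - 1  # largest value of a signed 64-bit counter

years_32 = MAX_INT32 / SECONDS_PER_YEAR
years_64 = MAX_INT64 / SECONDS_PER_YEAR

print(f"32-bit: about {years_32:.0f} years after 1970")
print(f"64-bit: about {years_64:.2e} years after 1970")
```

The 32-bit figure comes out at roughly 68 years, which is where 2038 comes from; the 64-bit figure is in the hundreds of billions of years.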

Finally it’d be the year of desktop Linux, once all the Windows users die off

This is the funniest comment I have ever read here. Thank you.

Debian, for example, is atm at work recompiling everything from 32-bit to 64-bit timestamps (thanks to open source this is no problem). Dunno what happens to proprietary legacy software.

I think everything works in windows but the old windows media player. You can test it by setting the time in a windows VM to 2039.

Obviously new systems are unaffected, the question is how many industrial controllers checking oil pipeline flow levels or whatever were installed before the fix and never updated.

Being somewhat adjacent to that with my work, there is a good chance anything in a critical area (hopefully fields like utilities, petroleum, areas with enough energy to cause harm) has decently hardened or updated equipment where it either isn’t an issue, will stop reporting trend data correctly, or will roll over to date “0”, which depending on the platform tends to be 1970 with industrial equipment, in my personal experience. That said, there is always the case that it will not be handled correctly and either run away or stop entirely.

As a future boltzmann brain, I agree.

2038 is approaching super fast and nobody seems to care yet

At the rate of one year per year, even.

For each second that passes we’re one second closer to 2038

Except for leap seconds. Time is the worst to work with :(

omg

deleted by creator

AFAIK that’s not entirely true, e.g. Debian is changing the system time from a 32-bit integer to 64-bit. Thus I assume other distros do this as well. However, this does not help for industrial or IOT devices running deprecated Unix / Linux derivatives.

industrial or IOT devices running deprecated Unix / Linux derivatives

This is my concern, all the embedded devices happily running in underground systems like pipes and cables. I assume there are at least a few which nobody even considered patching because they’ve “just worked” for decades!

Or like… PLANES! Some planes still update their firmware using floppy disks

They do at least get updates though, and they’re big enough that they don’t get forgotten!

That’s not true, lots of people are panicking about how fast they’re getting older

Well that’s justifiable. We’re not sure if we’re even going to make it to then

I can’t remember the name but I think this is some kind of paradox.

Like the preventative measures were so effective that they created a perception that there was no risk in the first place.

It’s called the prevention paradox: It’s when an issue is so severe that it is prevented with proactive action, so no real consequences are felt, so people think it wasn’t severe in the first place.

Case in point: Measles. It was a thing when I was a kid. Then it wasn’t. Now my kids have to deal with Measles because we can’t teach scientific literacy.

that waste of effort cold war… /s

I wasn’t working in the IT field back then, as I was only 16, but as I knew that it’d most likely be my field one day (yup, I was right), I followed this closely due to interest, and applied patches accordingly.

Everything kept working fine except this one modem I had.

I kinda wish I knew what it was like working on Y2K stuff. It sounds like the most mundane bug to fix, but the problem is that it was everywhere. Which I imagine made it pretty expensive 👀

That’s a pretty good description. And most software back then didn’t use nice date utilities, they each had their own inline implementation. So sometimes you had to figure out what they were trying to do in the original code, which was usually written by someone who’s not there anymore. But other times it was the most mundane thing, doing the same fix you already did in 200 other programs.

And computer networking, especially the ability to remote into a system and make changes or deliver updates en masse, was nowhere near as robust as it is today meaning a lot of those fixes were done manually.

And that modem was handling the nuke codes, right?

Most of the y2k problem was custom software, and really old embedded stuff. In my case, all our systems were fine at the OS, and I don’t remember any commercial software we had trouble with, but we had a lot of custom software with problems, as did our partners

“Lol Elon rocket go boom, science isn’t real” is also happening

Stupid people just think they’re the smartest ones in the room now

Elon musk isn’t a scientist, he’s a scammer who got lucky. That, and an asshole.

Well considering Elon situation I wouldn’t blame anyone for making fun of his idiotic ventures. Also starship is actually dumb and saying “you expected for it to blow up” is something no real scientist would’ve said unless they were making a bomb.

How is Starship dumb exactly? Making a new thing at any extreme of our current capability is going to be hard and it’s not unexpected when something goes wrong. What would be dumb is if they put human lives on the line

It had no payload on any of its flights. Rockets that have enough time/money put into development to have a reasonable expectation of working on the first try (and don’t have such an ambitious design) normally launch with a payload on their first flight. Sometimes, even those fail on the first few flights. Having the first few of a new rocket design fail before reliability is achieved is common (ex: Astra) and SpaceX’s other rocket, the Falcon 9, is known as the most reliable rocket, I even suspect it achieves landings more often lately than most others do launches.

Starship’s last launch went decently well, reaching orbit (which is as far as most rockets go!) but failing during reentry. It is also supposed to be the rocket with the largest payload capacity to low earth orbit, with 100-150 tons when reused and likely 200-300 when expended.

Starship, as it is right now, is already a better rocket than SLS. It can already carry more mass and be cheaper (even fully expended) than the SLS’s 4 billion cost per launch.

It will get better. Falcon 9 didn’t land the first time either, but now it has successfully landed more consecutive times than any other rocket has flown.

There’s nothing wrong with saying this is a test. This is only a test, and we don’t expect it to be perfect yet. Each time they learn from the data. And SpaceX hasn’t repeated the same mistake twice.

Y2K specifically makes no sense though. Any reasonable way of storing a year would use a binary integer of some length (especially when you want to use as little memory as possible). The same goes for manipulations; they are faster, more memory efficient, and easier to implement in binary. With an 8-bit signed integer counting from 1900, the concerning overflows would occur in 2028, not 2000. A base 10 representation would require at least 8 bits to store a two digit number anyway. There is no advantage to a base 10 representation, and there never has been. For Y2K to have been anything more significant than a text formatting issue, a whole lot of programmers would have had to go out of their way to be really, really bad at their jobs. Also, usage of dates beyond 2000 would have increased gradually for decades leading up to it, so the idea it would be any sort of sudden catastrophe is absurd.

The issue wasn’t using the dates. The issue was the computer believing it was now on those dates.

I’m going to assume you aren’t old enough to remember, but the “only two digits to represent the year” issue predates computers. Lots of paper forms just gave two digits. And a lot of early computer work was just digitising paper forms.

I remember paper forms having “19__” in the year field. Good times

When I was little I was scared of that problem and always put all four digits of the year in my homework.

You’re thinking of the problem with modern solutions in mind. Y2K originates from punch cards where everything was stored in characters. To save space only the last 2 digits of the year were stored, because back then you didn’t need to store the 19 of year 19xx. The technique of storing data stayed the same for a long time despite technology advancing beyond punch cards. The assumption that it’s always 19xx caused the Y2K bug because once it overflows to 00 the system doesn’t know if it’s 1900 or 2000.

With an 8-bit signed integer counting from 1900…

Some of the computers in question predate standardizing on 8 bits to the byte. You’ve got a whole post here of bad assumptions about how things worked.

a whole lot of programmers would have had to go out of their way to be really, really bad at their jobs.

You don’t spend much time around them, do you?

You do realize that “counting from 1900” meant storing only the last two digits and just hardcoding the programs to print “19” in front of it in those days? At best, an overflow would lead to 19100, 1910 or 1900, depending on the print routines.
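To make those three outcomes concrete, here is a small sketch of three hypothetical print routines. None of them is taken from a real system; they are just the simplest ways a hardcoded “19” prefix could meet a year offset of 100.

```python
# Three hypothetical ways a legacy print routine might render the year
# when the stored value is "years since 1900". Illustrative only.

def print_year_naive(offset: int) -> str:
    # Glue the hardcoded century onto whatever number comes out.
    return "19" + str(offset)

def print_year_truncated(offset: int) -> str:
    # Field is two characters wide, so only the first two are kept.
    return "19" + str(offset)[:2]

def print_year_wrapped(offset: int) -> str:
    # Only two digits are stored, so 100 wraps around to 00.
    return "19" + f"{offset % 100:02d}"

for routine in (print_year_naive, print_year_truncated, print_year_wrapped):
    print(routine.__name__, routine(99), routine(100))
# print_year_naive 1999 19100
# print_year_truncated 1999 1910
# print_year_wrapped 1999 1900
```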

Oh boy, you heavily underestimate the amount and level of bad decisions in legacy protocols. Read up on the topic. The date was for a long time stored as 6 decimal digits.

And then there is PIC 99 in Cobol. In modern languages, it makes no sense, but there is still a lot of really old code around and not everything is twos complement, especially if you do not need the efficiency in memory and calculations.

Look up some info on BCD or EBCDIC.
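For anyone who hasn’t met BCD, here is a minimal sketch of packed BCD, where each decimal digit takes four bits and two digits share a byte. It only illustrates the encoding idea; it is not a claim about what any particular COBOL or mainframe system actually stored, and the function names are made up.

```python
# Packed BCD sketch: two decimal digits per byte, one per 4-bit nibble.

def pack_bcd(digits: str) -> bytes:
    if len(digits) % 2 or not (digits.isascii() and digits.isdigit()):
        raise ValueError("need an even number of decimal digits")
    return bytes(
        (int(digits[i]) << 4) | int(digits[i + 1])
        for i in range(0, len(digits), 2)
    )

def unpack_bcd(data: bytes) -> str:
    # High nibble is the first digit, low nibble the second.
    return "".join(f"{b >> 4}{b & 0x0F}" for b in data)

packed = pack_bcd("991231")   # YYMMDD for 31 December (19)99
print(packed.hex())           # 991231
print(len(packed))            # 3
print(unpack_bcd(packed))     # 991231
```

A six-digit YYMMDD date fits in three bytes this way, versus six bytes as characters, and there is still no room for a century.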

When you do things right, people won’t be sure you’ve done anything at all.

Y2K is similar. Most people will remember not much happening at all. Lots of people worked hard to solve the problem and prevent disaster.

Was there ever really a threat to begin with? The whole thing sounds like Jewish space lasers to me.

Edit: Gotta love getting downvoted for asking a question.

Yes. A massive amount of work went into making sure the transition went smoothly.

Yes, most administrative programs (think hospitals, municipal, etc.) had the year stored in only 2 digits. Yesterday’s timestamp will read as 99 years in the future, since the year is 00. Imagine every todo item of the last 20 odd years suddenly being pushed onto your todo list. Timers set to go off every x amount of time can’t check when something last happened. Time critical nuclear safety mechanisms, computers getting stuck due to data overload, everything needed to be looked at to determine risk.

So you take all the dates, add size to store additional data, add 1900 to the years and you are set. In principle a very straightforward fix, but it takes time to properly implement. Because everyone was made aware of the potential issue IT professionals could more easily lobby for the time and funds to make the necessary changes before things went awry.
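A toy version of that, assuming a record that only keeps the last two digits of the year. The function and variable names are invented for the example, and real fixes obviously had to touch storage layouts, not just arithmetic.

```python
# Toy illustration of the two-digit-year problem and the "add 1900" fix.

def years_since_two_digit(last_yy: int, now_yy: int) -> int:
    # Legacy arithmetic: both years are stored as two digits.
    return now_yy - last_yy

def migrate_year(yy: int) -> int:
    # The fix described above: widen the field and add 1900 to every
    # existing record, since all of them were written in the 1900s.
    return 1900 + yy

last_run_yy = 99  # job last ran in 1999
print(years_since_two_digit(last_run_yy, 0))   # -99: "last ran in the future"

last_run_yyyy = migrate_year(last_run_yy)       # 1999
print(2000 - last_run_yyyy)                     # 1
```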

That’s fuckin wild and seems like a massive oversight.

Did they just not expect us all to live that long or did they just not think of it at all?

Yeah I would imagine poor/lazy planning or they either thought their tools would be replaced by then and/or that computers were just a fad so there’s no way they’d be used in the year 2000.

Depends on the “they”…

But generally, back in the day data storage, memory and processing power were expensive. Multiple factors more expensive than they are now. Storing a year with two digits instead of four was a saving worth making. Over time, some people just kept doing what they had been doing. Some people just learned from mentors to do it that way, and kept doing it.

It was somewhat expected that systems would improve and over time that saving wouldn’t be needed. Which was true. By the year 2000 “modern” systems didn’t need to make that saving. But there was a lot of old code and systems that were still running just fine, that hadn’t been updated to modern code/hardware. It became a bit of a rush job at the end to make the same upgrade.

There is a similar issue coming up in the year 2038. A lot of computing platforms store dates as the number of seconds since the beginning of 1970-01-01 UTC. As I type this comment there have been 1,710,757,161 seconds since that date. It’s a simple way to store time/date in a way that can be converted back to a human readable format quite easily. I’ve written a lot of code which does exactly this. I’ve also written a lot of code and data storage systems that store this number as a 32bit integer. Without drilling down into what that means, the limit of that data storage type will be a count of 4,294,967,296. That means at 2038-01-19 03:14:07 UTC, some of my old code will break, because it won’t be able to properly store the dates.

I no longer work for that employer, I no longer maintain that code. Back when I wrote that code, a 32bit integer made sense. If I wrote new code now, I would use a different data type that would last longer. If my old code is still in use then someone is going to have to update it. Because of the way business, software and humans work. I don’t expect anyone will patch that code until sometime around the year 2037.

Without drilling down into what that means, the limit of that data storage type will be a count of 4,294,967,296.

A little nitpick: the count at that time will be 2,147,483,647. time_t is usually a signed integer.
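A quick way to check those numbers, as a sketch. It assumes a signed 32-bit counter that wraps around two’s-complement style on overflow; what a real system does at that point depends on the language and platform, and `wrap_int32` is just a helper made up to simulate it.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # 2,147,483,647

def wrap_int32(n: int) -> int:
    # Simulate two's-complement wraparound of a signed 32-bit value.
    return (n + 2**31) % 2**32 - 2**31

last_good = EPOCH + timedelta(seconds=INT32_MAX)
print(last_good.isoformat())      # 2038-01-19T03:14:07+00:00

wrapped = wrap_int32(INT32_MAX + 1)
print(wrapped)                    # -2147483648
after_wrap = EPOCH + timedelta(seconds=wrapped)
print(after_wrap.isoformat())     # 1901-12-13T20:45:52+00:00
```

So under that assumption the second after 03:14:07 reads as a date in December 1901 rather than 2038.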

I often wonder what happened to the code I wrote in 2010 and used for production coordination, which was working fine when I retired (2018). I figured the minute I left the hotshot kids would want to upgrade to their own styles. Not everyone liked it bc it wasn’t beautiful, but no one could say it wasn’t functional, so it persisted. I was busy learning to design and assemble CNC routers; it worked, and I didn’t have time to make a selection of backgrounds & banners. It’s just Excel, AutoCAD, & Access using VBA, which everyone says they are going to deprecate but, alas, people still want it. I remember Autodesk announcing the deprecation of VBA c. 2012, and I just looked and I guess they changed their mind bc there are modules for VBA available

14 years ago at Stack Overflow: What is the future of VBA? https://stackoverflow.com/questions/1112491/what-is-the-future-of-vba

Download the Microsoft VBA Module for AutoCAD - Autodesk (links to download VBA modules for their products, Feb 7, 2024): https://www.autodesk.com/support/technical/article/caas/tsarticles/ts/3kxk0RyvfWTfSfAIrcmsLQ.html

To install the Microsoft Visual Basic for Applications Module (VBA) for AutoCAD, do the following: Select the appropriate download from the list below. Close all programs. In Windows Explorer, double-click the downloaded self-extracting EXE file.

sometimes legacy methods last longer bc no one wants to be a hotshot.

you think that’s bad, just wait til 2038 when the UNIX time rolls over.

The Mayans figured a calendar that only went to 2012 would be good enough. And they were right, their civilization didn’t exist anymore in 2012. Only relevance their calendar system had in 2012 was that some people felt like it was a prophecy about the end of the world. Nope, just was an arbitrary date the Mayans rightly assumed would be far enough away it wouldn’t matter.

While I suppose you could make a date format that was infinitely expandable, it would take more processing power and is really unnecessary.

Anyway got until 2038 until we’ll have to deal with a popular date format running out of bits. We’ll probably be in some kind of mad max post apocalyptic world before then so it won’t matter.

That’s a misconception. The Maya (not Mayan, that’s the language) long count for December 20, 2012 was 12.19.19.17.19. December 21, 2012 was 13.0.0.0.0. Today is 13.0.11.7.4. It continues the same way indefinitely, it’s just the number of days since some arbitrary date (August 11, 3114 BCE if you’re curious) in base 20, with the second to last digit in base 18, which seems odd at first but it rather cleverly makes it so the third digit can stand in as a rough approximation of years, and the second is approximately a generation. Now October 13, 4772 could be seen as an endpoint but there’s nothing that says it can’t be extended with one more digit to 1.0.0.0.0.0, and then you’re good for another 150,000 years or so.

Now there was a creation myth that said 0.0.0.0.0 was the previous world’s 13.0.0.0.0, but there was no recorded belief that this was any sort of recurring cycle, in fact plenty of Maya texts predicted astronomical events millennia past 2012. The idea that it was recurring was probably borrowed from the similar Greek construct of ekpyrosis, which doesn’t specify any sort of time frame.
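The day-count arithmetic described above can be sketched in a few lines. This anchors on December 21, 2012 being 13.0.0.0.0 (as stated above) and counts days from there using Python’s proleptic Gregorian calendar; the unit names in the code (baktun, katun, tun, uinal, kin) are the conventional ones and are not from the comment itself.

```python
from datetime import date

# Anchor: 2012-12-21 is 13.0.0.0.0, i.e. 13 * 144,000 days into the count.
ANCHOR_DATE = date(2012, 12, 21)
ANCHOR_DAYS = 13 * 144_000

def long_count(d: date) -> str:
    days = ANCHOR_DAYS + (d - ANCHOR_DATE).days
    baktun, rest = divmod(days, 144_000)  # 20 katun
    katun, rest = divmod(rest, 7_200)     # 20 tun
    tun, rest = divmod(rest, 360)         # 18 uinal, roughly a year
    uinal, kin = divmod(rest, 20)         # 20 kin (days)
    return f"{baktun}.{katun}.{tun}.{uinal}.{kin}"

print(long_count(date(2012, 12, 20)))  # 12.19.19.17.19
print(long_count(date(2012, 12, 21)))  # 13.0.0.0.0
print(long_count(date(2024, 3, 17)))   # 13.0.11.7.4
```

The only place base 18 shows up is the `360 = 18 * 20` step, which is what makes the third position track years approximately.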

You’re saying “imagine” a lot there.

Were there concrete examples of critical software that actually would’ve failed? At the time I remember there was one consultant that was on the news constantly saying everything from elevators to microwaves would fail on Y2K. Of course this was creating a lot of business for his company.

When you think about it storing a date with 6 bytes would take more space than using Unix time which would give both time and date in four bytes. Y2K38 is the real problem. Y2K was a problem with software written by poor devs that were trying to save disk space by actually using more disk space than needed.

And sure a lot of software needed to be tested to be sure someone didn’t do something stupid. But a lot of it was indeed an exaggeration. You have to reset the time on your microwave after a power outage but not the date, common sense tells you your microwave doesn’t care about the year. And when a reporter actually followed up with the elevator companies, it was the same deal. Most software simply doesn’t just fail when it’s run in an unexpected year.

If someone wrote a time critical safety mechanism for a nuclear reactor that involved parsing a janky homebrew time format from a string then there’s some serious problems in that software way beyond Y2K.

The instances of the Y2K bug I saw in the wild, the software still worked, it just displayed the date wrong.

Y2K38 is the real scary problem because people that don’t understand binary numbers don’t understand it at all. And even a lot of people in the technology field think it’s not a problem because “computers are 64 bit now.” Don’t matter how many bits the processor has, it’s only the size that’s compiled and stored that counts. And unlike some janky parsed string format, unix time is a format I could see systems at power plants actually using.

Some of the software at my employer at the time, would have failed. In particular, I fixed some currency trading software

When you think about it storing a date with 6 bytes would take more space than using Unix time which would give both time and date in four bytes. Y2K38 is the real problem. Y2K was a problem with software written by poor devs that were trying to save disk space by actually using more disk space than needed.

This comes to mind:

You don’t store dates as Unix time. Unix timestamps indicate a specific point in time. Dates are not a specific point in time.

You also don’t store dates in a string that you’ll have to parse later. I’ve had to deal with MM-DD-YYYY vs. DD-MM-YYYY problems more times than I can count.

And you understand that you could have a date in unix time and leave the time to be midnight, right? You’d end up with an integer that you could sort without having to parse every goddamn string first.

And for God’s sake if you insist on using strings for dates at the very least go with something like YYYY-MM-DD. Someone else may someday have to deal with your shit code, at the very least make the strings sortable FFS.
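The sortability point is easy to see with a plain string sort. The sample dates below are made up; the only thing being shown is that YYYY-MM-DD strings sort chronologically as text while MM-DD-YYYY strings do not.

```python
# Same three dates in two string formats (sample values for illustration).
iso_dates = ["2024-03-18", "1999-12-31", "2000-01-01"]
us_dates = ["03-18-2024", "12-31-1999", "01-01-2000"]

sorted_iso = sorted(iso_dates)
sorted_us = sorted(us_dates)

print(sorted_iso)  # ['1999-12-31', '2000-01-01', '2024-03-18']  chronological
print(sorted_us)   # ['01-01-2000', '03-18-2024', '12-31-1999']  not chronological
```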

You also don’t store dates in a string that you’ll have to parse later

Depends. If the format is clearly defined, then there’s no problem. Or could use a binary format. The point is that you store day/month/year separately, instead of a Unix timestamp.

And you understand that you could have a date in unix time and leave the time to be midnight, right?

No, you can’t.

First of all, midnight in what timezone? A timestamp is a specific instant in time, but dates are not, the specific moment that marks the beginning of a date depends on the timezone.

Say you store the date as midnight in your local timezone. Then your timezone changes, and all your stored dates are incorrect. And before you claim timezones rarely change, they change all the time. Even storing it as the date in UTC can cause problems.

You use timestamps for specific instants in time, but never for storing things that are in local time. Even if you think you are storing a specific instant in time, you aren’t. Say you make an appointment in your agenda at 14:00 local time, you store this as a Unix timestamp. It’s a specific instant in time, right? No, it’s not. If the time zone changes so, for example, DST goes into effect at a different time, your appointment could suddenly be an hour off, because that appointment was not supposed to be at that instant in time, it was supposed to be at 14:00 in the local timezone, so if the timezone changes the absolute point in time of that appointment changes with it.
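Here is a small sketch of the “midnight in what timezone?” problem. To stay self-contained it uses fixed UTC offsets (+13 and +12, picked arbitrarily as a before/after pair) instead of real zone rules; actual code would more likely use something like `zoneinfo`, and the variable names are invented.

```python
from datetime import datetime, timedelta, timezone

# Assumed offsets for illustration: the zone is UTC+13 when the date is
# stored, and later the rules change so the same place is UTC+12.
OFFSET_WHEN_STORED = timezone(timedelta(hours=13))
OFFSET_AFTER_CHANGE = timezone(timedelta(hours=12))

# "Store the date 2024-03-18" as midnight local time, as a Unix timestamp.
stored = int(datetime(2024, 3, 18, tzinfo=OFFSET_WHEN_STORED).timestamp())

date_original = datetime.fromtimestamp(stored, tz=OFFSET_WHEN_STORED).date()
date_in_utc = datetime.fromtimestamp(stored, tz=timezone.utc).date()
date_after_change = datetime.fromtimestamp(stored, tz=OFFSET_AFTER_CHANGE).date()

print(date_original)      # 2024-03-18
print(date_in_utc)        # 2024-03-17
print(date_after_change)  # 2024-03-17
```

The timestamp never changed, but the calendar date it decodes to depends on which offset is applied when reading it back.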

You don’t have a line that checks the format and auto converts to your favorite?

You’re probably getting down voted because you asked here instead of a search engine, and many people think it’s common knowledge, and it was already answered in this thread.

Sometimes an innocent question looks like someone JAQing off.

Sounds like a great way to keep people from interacting at all.

Doesn’t seem to be a big problem for much of the thread nor many other threads.

It was a massive threat as it would break banking records and aircraft flight paths. Those industries spent millions to fix the problem. In 14 years (2038) we’ll have a similar problem with all 32bit computers breaking if they haven’t had firmware updates to store UTC time as a 64bit number composed of two 32bit numbers. Lots of medical, industrial, and government equipment will need to either be patched or replaced.

By comparison, there were a few systems that had issues on February 29th because of leap day. Issues with such a routine thing in this current day should be unthinkable.

There wasn’t much of a real “threat”, in that planes wouldn’t fall out of the sky. But banking systems would probably get quite confused, potentially leaving people unable to access money easily until it got fixed.

You insinuate that these people might be gullible dopes who swallow whatever it’s popular to swallow, no brains involved.

We have a zero tolerance policy for that attitude.

I wonder how many people will see this and not know it’s a quote from Futurama

The sysadmin curse (and why you document your actions in a ticketing system).

I literally had this exact exchange with someone last year, when they tried to cast doubt on global warming by comparing it to the ozone. Another person did the same, using acid rain, and I pointed out that the northeast sued the shit out of the Midwest until they cut that shit with the coal fire power plants.

The Conservative Party led Canadian Government and the Reagan-era Republican US Government started working on the US-Canada Air Quality Agreement, which was signed by the George H.W. Bush administration into law in the US (and the Brian Mulroney led Government of Canada).

That’s right — two Conservative governments identified a problem, listened to their scientists, and enacted a solution to acid rain. And now the problem has virtually disappeared.

Oh how low Conservatives have fallen on both sides of the border since those days.

I use talking points like these a fair amount with Republicans. Try to get them to think back to when they were leaders in environmental policy. Get back to their roots of environmental stewardship. It seems to have moved the needle slightly.

Many, unfortunately, are so deep in doublethink they won’t believe that tricky Dick initiated the whole thing with the EPA, clean water or air act, and the endangered species acts. Some came after, I think, but he set it rolling. He was still a bad dude, but he did some good stuff

There were goddamn Nickelodeon phone-a-thons where you pledged to not use cfc products. This shit was serious.

Edit: I just remembered, they talked about how bad the sun was for kids in Australia, or something.

Australia and New Zealand do not fuck about with sun safety. Even with the improvements in the ozone layer, our skin cancer rates are still way higher than the rest of the world

New Zealand do not fuck about with sun safety.

Except we were kicking the can with sun screen regulation until 2022.

https://comcom.govt.nz/business/your-obligations-as-a-business/product-safety-standards/sunscreen

https://www.legislation.govt.nz/act/public/2022/0004/latest/whole.html

Until this law, sun screen lotion didn’t have to prove that they actually provided the SPF that they claimed.

Yeah I lived in Auckland for a bit, they don’t care as much about sunscreen. More sun safety conscious than Pacific Northwesterners in my experience, but probably closer to that group than myself as a fair-skinned Aussie that’s used to getting burnt after just sitting outside in the shade for awhile

I’d argue that while we are much more diligent than other countries, and regulations are much stronger, the average person doesn’t pay nearly enough attention, and the fact the UV index isn’t required to be mentioned on weather reports, or as prominently or more prominently than the temperature, is a big oversight in my opinion.

I check the UV every time I go outside (other than when it’s died down over winter), just as you’d check the temperature, and I think it’s wild barely anyone else does.

The sun is still awful here, the ozone hole is still a thing.

But thanks world, at least I can go out for a solid 4.5 months of the year without worrying about the sun at all, and 6 of only needing to be somewhat careful. Not too shabby :)

Ah but the Ozone hole is increasing again in no small thanks to China!

https://www.abc.net.au/news/science/2019-05-23/mystery-ozone-depleting-gas-tracked-to-china/11137546

I don’t think it’s only thanks to China. I think it is thanks to the whole world, a huge chunk of big companies’ manufacturing is outsourced to China.

No.

The rest of the world’s doing a great job at following through on CFC bans.

This is entirely on China and China alone. No one is forcing their factories to cut corners and use them. Just the same as plastic rice, gutter oil, poison baby formula, etc.

Wait wait wait. Plastic rice?!

deleted by creator

I think the argument to be made is that if China is cutting these corners the rest of the world shouldn’t do business with them. By choosing to use a factory that is using CFCs you are increasing demand for them.

I see, thanks

Imagine if we did this with climate change. Imagine if we tried to switch to renewable energy en masse 20 years ago.

Problem with that is that in comparison the alternative to CFC was not that much more expensive and then a cheaper one was invented shortly after.

For climate change you basically can double our energy costs and therefore double the cost of almost everything.

Not to seem callous, but the first world could learn to live off of a little less.

Honestly this is what I keep saying and everyone gets pissed when I do.

There’s enough resources on this planet that every living human could live a decently luxurious life. But because we allow a small handful of us to hoard all those resources we have poverty on a global scale.

Whoa there buddy. That would put my butler’s butler out of a job. Also where does a person park their yacht if not inside another, larger yacht?

It wouldn’t even be less. We’d just have to rein in the capitalist feeding frenzy a bit.

2000 brands of shoes? Advertising? 99% of our production is wasted.

But that isn’t true anymore, right? Renewables are now way cheaper per produced Watt. And still, we’re stuck with people pretending that’s not true.

It’s not so simple. They’re cheaper than building non renewable, but are they cheaper than keeping the current plants running? Also, energy consumption keeps growing, and in many places, new generating plants using renewables usually only take care of the growth, and don’t leave room to take older plants out of operation. If we don’t make huge efforts to reduce our energy consumption, I doubt we’re going to get rid of non renewables so soon…

Currently they are cheaper despite the financial support for fossil fuels, but back then they were not, and not enough was spent on research.

The cost of everything would double?

… Oh no…

like as if we wanted to live

This has since been determined to have tack-on benefits in the fight against the climate crisis as well: it’s halved the potential growth in global average temperatures by 2100. It cannot be overstated just how fantastic that is.

We went from everyone being baked alive and having 20 kinds of skin cancer to boot, to merely dealing with catastrophic climate change and society-changing migrations of people, the likes of which haven’t been documented since the successive eras of steppe invasions into Europe, China, India, and the Middle East.

Out of the fire and into the frying pan.

I might just be drunk, but that was a very poetic turn of phrase.

#transcription

Matt Walsh

@MattWalshBlog

Remember when they spent years telling us to panic over the hole in the ozone layer and then suddenly just stopped talking about it and nobody ever mentioned the ozone layer

Derek Thompson

@DKThomp

What happened is scientists discovered chlorofluorocarbons were bad for the ozone, countries believed them, the Montreal Protocol was signed, and CFC use fell by 99.7%, leading to the stabilization of the ozone layer, perhaps the greatest example of global cooperation in history.

I can read it fine thanks

I’m not sure what your intent was, but you’re coming off as “I don’t want online spaces to be welcoming to people who are visually impaired.”

Like me 🤣😎

If there’s ever a specific post you want transcribed feel free to reach out and I can try my best.

Do you go around carving stairs into ramps, too?

I have never heard this phrase before but it is absolutely brilliant.

Removed by mod

Believe it or not I don’t do it for you

Most programs, such as search features, can’t.

And didn’t they find a bunch of Chinese factories pumping them out again not long ago?

Imagine that… Believing what scientists say? Who does that?

Grinds teeth and silently screams inside his head

Same same 😔

Just to be clear, are we sure that the ozone holes are still shrinking?

As far as I’m aware, the hole in the ozone layer is basically gone.

Actually there are signs it’s been growing again. Because we forget history so quick.

Sorry I left my ozone vacuum running overnight.

I never realised how much MegaMaid reminds me of Unreal Tournament.

I see articles up to 2022 talking about it shrinking, healing on the predicted timeframe. 2023 is a huge outlier, possibly caused by a volcano, but there’s variability every year. That doesn’t mean it’s growing again

I need to look into it again, but they had found factories in China emitting a ton of it.

Really? Damn, didn’t know that

The ozone hole size is influenced by the strength of the polar vortex, the Antarctic temperature, and other things in addition to the concentration of CFC molecules. It’s barely shrunk, but CFCs are so long-lived that was expected - the critical point is it stopped growing over 20 years ago. I believe they expect to start seeing shrinking within the next decade.

Looks like it had been expected to heal by 2040, but might also be affected by climate change. Reminder that even when we fix climate change, CO2 stays in the atmosphere for over a century. We can only stop making things worse, but it’s your great-grandchildren who stand to really benefit.

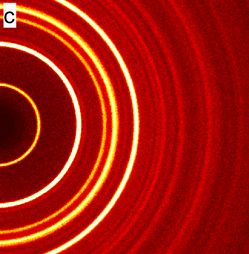

I was thinking of this paper from 2018:

ACP - Evidence for a continuous decline in lower stratospheric ozone offsetting ozone layer recovery

Abstract. Ozone forms in the Earth’s atmosphere from the photodissociation of molecular oxygen, primarily in the tropical stratosphere. It is then transported to the extratropics by the Brewer–Dobson circulation (BDC), forming a protective ozone layer around the globe. Human emissions of halogen-containing ozone-depleting substances (hODSs) led to a decline in stratospheric ozone until they were banned by the Montreal Protocol, and since 1998 ozone in the upper stratosphere is rising again, likely the recovery from halogen-induced losses. Total column measurements of ozone between the Earth’s surface and the top of the atmosphere indicate that the ozone layer has stopped declining across the globe, but no clear increase has been observed at latitudes between 60° S and 60° N outside the polar regions (60–90°). Here we report evidence from multiple satellite measurements that ozone in the lower stratosphere between 60° S and 60° N has indeed continued to decline since 1998. We find that, even though upper stratospheric ozone is recovering, the continuing downward trend in the lower stratosphere prevails, resulting in a downward trend in stratospheric column ozone between 60° S and 60° N. We find that total column ozone between 60° S and 60° N appears not to have decreased only because of increases in tropospheric column ozone that compensate for the stratospheric decreases. The reasons for the continued reduction of lower stratospheric ozone are not clear; models do not reproduce these trends, and thus the causes now urgently need to be established.

and this paper from 2023:

Potential drivers of the recent large Antarctic ozone holes | Nature Communications

The past three years (2020–2022) have witnessed the re-emergence of large, long-lived ozone holes over Antarctica. Understanding ozone variability remains of high importance due to the major role Antarctic stratospheric ozone plays in climate variability across the Southern Hemisphere. Climate change has already incited new sources of ozone depletion, and the atmospheric abundance of several chlorofluorocarbons has recently been on the rise. In this work, we take a comprehensive look at the monthly and daily ozone changes at different altitudes and latitudes within the Antarctic ozone hole. Following indications of early-spring recovery, the October middle stratosphere is dominated by continued, significant ozone reduction since 2004, amounting to 26% loss in the core of the ozone hole. We link the declines in mid-spring Antarctic ozone to dynamical changes in mesospheric descent within the polar vortex, highlighting the importance of continued monitoring of the state of the ozone layer.

Unfortunately there can still be emissions:

https://www.nature.com/articles/s41586-019-1193-4

From abstract:

A recently reported slowdown in the decline of the atmospheric concentration of CFC-11 after 2012, however, suggests that global emissions have increased [3,4]. A concurrent increase in CFC-11 emissions from eastern Asia contributes to the global emission increase, but the location and magnitude of this regional source are unknown [3]. Here, using high-frequency atmospheric observations from Gosan, South Korea, and Hateruma, Japan, together with global monitoring data and atmospheric chemical transport model simulations, we investigate regional CFC-11 emissions from eastern Asia. We show that emissions from eastern mainland China are 7.0 ± 3.0 (±1 standard deviation) gigagrams per year higher in 2014–2017 than in 2008–2012, and that the increase in emissions arises primarily around the northeastern provinces of Shandong and Hebei.

Like he even read the response

The problem is not if he reads the response, it’s that the followers won’t or if they do, will just fight it.

TBH, the “whole world agreed on something” narrative doesn’t really reflect what happened.

Actually, the industry dropped CFCs after a cheaper and, luckily, safer alternative was discovered right around that time.

The fact is, most companies are fine to let an existing system run rather than replace it with one that has a cheaper consumable thing, provided they can still get that consumable and the cost of replacing that system is high.

Basically, corps would have kept buying and using CFCs because replacing the refrigeration system is too costly.

Not only was an alternative found that was cheaper and safer and almost as good (as effective), but scientists and engineers put in the effort to find ways to adapt existing systems to the new working fluid. All for significantly less than replacing the system.

Not only was a replacement found, but it was made economically viable for widespread deployment in a very short timeframe; not just having a short development time, but also a very short duration to deploy the new solution to an existing system.

You’re right that it was cheaper and everything, but most of the time, changing the working fluid of a refrigerator or air conditioning unit requires replacing the system. They worked around that. Additionally, you’re correct that it was industry that made the change and pushed it to their clients.

I just want to make sure we recognise the efforts put in by the scientists and engineers that enabled the rapid switch to non-CFC based cooling systems. It’s still an amazing achievement IMO, and something that required a remarkable amount of cooperation by people who probably don’t cooperate often or at all (and are, in all likelihood, fairly hostile to each other most of the time).

IMO, that’s still one of the best examples of global cooperation that anyone could possibly point to. Rarely do we have a problem where there’s almost universal consensus on the issue and how to fix it. In this case, there was. That level of cooperation among the people of earth is borderline unparalleled; the only other times we cooperated this well that people would know about are usually negotiations done with the barrel of a gun. Namely the world wars. One group said that we’re going to do a thing, another group said nope. It was settled with lives, bullets and bombs, and nearly every person alive was on one side or the other… Except Sweden, I suppose… And maybe smaller countries that didn’t have enough of an army to participate. (I’m sure there’s dozens of reasons, but I’m not a historian)

Without guns, bombs, or even threats, just a presentation of the facts and a proposal for a solution, everyone just … went along with it.

To me, that’s unprecedented.

There was a necessary round of nearly all governments on Earth agreeing to fine and shut down businesses, or even throw executives in jail, if they insisted on using the more expensive alternative.

Only after that did people stop using CFCs.

Honestly, sometimes I wonder if we live in an episode of Captain Planet. Some people look like plain childish cartoon villains.

Conservatives aren’t used to the concept of “Problems go away when you do something about them.”

They are stuck in the mindset of “The problem will always be with us, so just shame those suffering from it and isolate them so we don’t catch their problem.”

To be honest, this is not just conservatives.

Naw, this is literally the conservative mind set. Even if someone doesn’t vote for republicans, thinking like this is conservative thinking.

This is also the liberal mind set.

No it isn’t.

So what are your views on liberals that support Biden regardless of his funding of genocide in Palestine? To me it seems exactly like this mind set of “we can’t fix this, let’s just not let their problem spill over to us”.

My view is that I would prefer someone younger and with similar ideals as Bernie but in reality, we have a choice between Biden and a man that given the chance, would end liberal’s right to vote forever.

I feel like people that bitch and complain about Biden do not at all understand the danger we are all in if Trump wins because the vote is split. Republicans do not have a conscience. They are more than happy to band together despite their disagreements if it means that they win. I just wonder why in the fuck anyone would risk that happening again given the decades of harm Trump caused in one term.

So am I happy about voting for Biden? No, not really. It bothers the fuck out of me that Israel has the support it does. But the reality is that Trump and the modern republican party are about [] that close to reenacting the night of broken glass and I’d rather that not happen.

I don’t think you understand the point of view of people criticizing Biden. Democrats are the ones that put Trump in power, just so they can have an easier election and don’t have to put up more popular candidates to run against him. Voting for Biden is simply accepting defeat: that their plan worked, and that they can do absolutely anything and you will support them, because they will also support a worse candidate on the other side at the same time. It is not looking at the big picture, long term. In the future they can get someone like Trump to be a Democrat candidate and support someone even worse on the Republican side, and you will have to vote for them under exactly the same situation. Democrats have a candidate that literally funds a genocide, and we would think that once that line is crossed people would simply say that is enough, but apparently even Hitler would be elected in US elections as long as he places someone worse as prime candidate of another party.