The best part of the fediverse is that anyone can run their own server. The downside of this is that anyone can easily create hordes of fake accounts, as I will now demonstrate.

Fighting fake accounts is hard and most implementations do not currently have an effective way of filtering out fake accounts. I’m sure that the developers will step in if this becomes a bigger problem. Until then, remember that votes are just a number.

This was a problem on reddit too. Anyone could create accounts - heck, I had 8 accounts: one main, one alt, one “professional” (linked publicly on my website), and five for my bots (whose accounts were optimistically created, but were never properly run). I had all 8 accounts signed in on my third-party app and I could easily manipulate votes on the posts I posted.

I feel like this is what happened when you’d see posts with hundreds / thousands of upvotes but had only 20-ish comments.

There needs to be a better way to solve this, but I’m unsure if we truly can solve this. Botnets are a problem across all social media (my undergrad thesis many years ago was detecting botnets on Reddit using Graph Neural Networks).

Fwiw, I have only one Lemmy account.

I see what you mean, but there’s also a large number of lurkers, who will only vote but never comment.

I don’t think it’s unfeasible to have a small number of comments on a highly upvoted post.

If it’s a meme or shitpost there isn’t anything to talk about

Maybe you’re right, but it just felt uncanny to see thousands of upvotes on a post with only a handful of comments. Maybe someone who’s active on the bot-detection subreddits can chime in.

I agree completely. 3k upvotes on the front page with 12 comments just screams vote manipulation

True, but there were also a number of subs (thinking of the various meirl spin-offs, for example) that naturally had limited engagement compared to other subs. It wasn’t uncommon to see a post with like 2K upvotes and five comments, all of them remarking on how few comments there actually were.

Reddit had ways to automatically catch people trying to manipulate votes though, at least the obvious ones. A friend of mine posted a reddit link in our group for everyone to upvote and got temporarily suspended for vote manipulation about an hour later. I don’t know if something like that can be implemented in the Fediverse, but some people on GitHub suggested a way for instances to share with other instances how trusted/distrusted a user or instance is.

An automated trust rating will be critical for Lemmy, longer term. It’s the same arms race as email has to fight. There should be a linked trust system of both instances and users. The instance ‘vouches’ for the user’s trust score. However, if other instances collectively disagree, then the trust score of the instance is also hit. Other instances can then use this information to judge how much to allow from users on that instance.
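A minimal sketch of that linked trust idea, assuming trust scores in the range 0 to 1, a simple product to combine instance trust with the user trust the instance vouches for, and a fixed penalty when peer instances disagree. `TrustLedger`, `vouch`, `peer_report` and `PENALTY` are hypothetical names, not anything Lemmy provides.

```python
from __future__ import annotations

from dataclasses import dataclass, field

PENALTY = 0.2  # hypothetical: trust an instance loses per disputed vouch


@dataclass
class TrustLedger:
    # instance domain -> how far we trust that instance's reports (0..1)
    instance_trust: dict[str, float] = field(default_factory=dict)
    # (instance, user) -> trust score the home instance vouches for (0..1)
    vouched: dict[tuple[str, str], float] = field(default_factory=dict)

    def vouch(self, instance: str, user: str, score: float) -> None:
        # New instances start fully trusted; a stricter policy could start lower.
        self.instance_trust.setdefault(instance, 1.0)
        self.vouched[(instance, user)] = score

    def effective_trust(self, instance: str, user: str) -> float:
        # A vouch is only worth as much as the instance making it.
        return self.instance_trust.get(instance, 0.0) * self.vouched.get((instance, user), 0.0)

    def peer_report(self, instance: str, user: str, peer_scores: list[float],
                    tolerance: float = 0.3) -> None:
        # If other instances collectively disagree with the vouch,
        # the vouching instance's own trust takes the hit.
        if not peer_scores:
            return
        consensus = sum(peer_scores) / len(peer_scores)
        if abs(consensus - self.vouched.get((instance, user), 0.0)) > tolerance:
            current = self.instance_trust.get(instance, 1.0)
            self.instance_trust[instance] = max(0.0, current - PENALTY)
```

Other instances would then scale how much they accept from a user by `effective_trust`, so a lying instance drags down every account it vouches for.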

LLM bots have made this approach much less effective, though. I can just leave my bots for a few months or a year to build reputation and automate them so that they are completely indistinguishable from 200 natural-looking users, making my opinion carry 200x the weight. Mostly for free. A person with money could do so much more.

It’s the same game as email. An arms race between spam detection, and spam detector evasion. The goal isn’t to get all the bots with it, but to clear out the low hanging fruit.

In your case, if another server noticed a large number of accounts working in lockstep, that’s fairly obvious bot-like behaviour. If their home server also noticed the pattern and reported it (lowering those users’ trust rating), then the instance won’t be dinged harshly. If it reports that all is fine, then it’s assumed the instance might be involved.

If you control the instance, then you can make it lie, but this downgrades the instance’s score. If it’s someone else’s, then there is incentive not to become a bot farm, or at least be honest in how it reports to the rest.
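The reporting rule described here can be sketched as a small update function. The penalty sizes and the two-way `HomeResponse` are hypothetical simplifications; a real system would likely weigh how many peers flagged the accounts.

```python
from __future__ import annotations

from enum import Enum


class HomeResponse(Enum):
    CONFIRMED = "confirmed"  # home server also flagged the accounts
    ALL_FINE = "all_fine"    # home server insists nothing is wrong


def reconcile(instance_score: float, user_scores: dict[str, float], flagged: list[str],
              response: HomeResponse, user_hit: float = 0.5,
              instance_hit: float = 0.25) -> tuple[float, dict[str, float]]:
    """Apply the outcome of a lockstep report about `flagged` accounts.
    Penalty sizes are hypothetical."""
    updated = dict(user_scores)
    for user in flagged:
        # The flagged accounts lose trust either way.
        updated[user] = max(0.0, updated.get(user, 0.0) - user_hit)
    if response is HomeResponse.ALL_FINE:
        # The home server vouched for accounts that look like bots,
        # so the instance itself becomes suspect.
        instance_score = max(0.0, instance_score - instance_hit)
    return instance_score, updated
```

An honest instance that confirms the report keeps its score; one that lies loses a little every time, which is the incentive the comment describes.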

This is basically what happens with email. It’s FAR from perfect, but a lot better than nothing. I believe 99+% of all emails sent are spam. Almost all get blocked. The spammers have to work to get them through.

This will be very difficult. With Lemmy being open source (which is good), bot makers can just avoid the pitfalls they see in the system (which is bad).

I feel like this is what happened when you’d see posts with hundreds / thousands of upvotes but had only 20-ish comments.

Nah it’s the same here in Lemmy. It’s because the algorithm only accounts for votes and not for user engagement.

Yeah votes are the worst metric to measure anything because of bot voters.

I’m curious what value you get from a bot? Were you using it to upvote your posts, or to crawl for things that you found interesting?

The latter. I was making bots to collect data (for the previously-mentioned thesis) and to make some form of utility bots whenever I had ideas.

I once had an idea to make a community-driven tagging bot to tag images (like hashtags). This would have been useful for graph building and just general information-lookup. Sadly, the idea never came to fruition.

Cool, thank you for clarifying!

Congratulations on such a tough project.

And yes, as long as the API is accessible somebody will create bots. The alternative is far worse though

Yes, I feel like this is a moot point. If you want it to be “one human, one vote” then you need to use some form of government login (like id.me, which I’ve never gotten to work). Otherwise people will make alts and inflate/deflate the “real” count. I’m less concerned about “accurate points” and more concerned about stability, participation, and making this platform as inclusive as possible.

In my opinion, the biggest (and quite possibly most dangerous) problem is someone artificially pumping up their ideas. To all the users who sort by active / hot, this would be quite problematic.

I’d love to see some social media research groups actually consider how to detect and potentially eliminate this issue on Lemmy, considering Lemmy is quite new and still malleable at this point (compared to other social media). For example, if they think metric X may be a good idea to include in all metadata to increase the chances of detection, then it may be possible to include it in the source code for posts / comments / activities.

I know a few professors and researchers who do research on social media and associated technologies, I’ll go talk to them when they come to their office on Monday.

!remindme - oh wait…

@[email protected] 1 day

:)

@Lumidaub Ok, I will remind you on Monday Jul 10, 2023 at 9:36 AM PDT.

I have like tens of accounts on reddit.

I always had 3 or 4 reddit accounts in use at once: one for commenting, one for porn, one for discussing drugs, and one for pics that could be linked back to me (of my car, for example). I also made a new commenting account about once a year so that if someone recognized me, they wouldn’t be able to find every comment I’ve ever written.

On lemmy I have just two now (other is for porn) but I’m probably going to make one or two more at some point

I have about 20 reddit accounts… I created/switched accounts every few months when I used reddit.

On Reddit there were literally bot armies through which thousands of votes could be cast instantly. It will become a problem if votes have any actual effect.

It’s fine if they’re only there as an indicator, but if the votes are what determine popularity, prioritize visibility, it will become a total shitshow at some point. And it will be rapid. So yeah, better to have a defense system in place asap.

If you and several other accounts all upvoted each other from the same IP address, you’d get a warning from reddit. If my wife ever found any of my comments in the wild, she would upvote them. The third time she did it, we both got a warning about vote manipulation. They threatened to ban both of our accounts if we did it again.

But here, no one is going to check that.

I don’t know how you got away with that, to be honest. Reddit has fairly good protection against that behaviour. If you upvote something from the same IP with different accounts reasonably close together, there’s a warning. Do it again and there’s a ban.
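Reddit’s actual rules aren’t public, so this is only a guess at the behaviour described: different accounts voting on the same post from the same IP within some window get a warning, and a repeat gets a ban. `WINDOW_SECONDS`, `Vote`, `same_ip_incidents` and `apply_strikes` are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

WINDOW_SECONDS = 600  # hypothetical reading of "reasonably close together"


@dataclass(frozen=True)
class Vote:
    account: str
    post_id: str
    ip: str
    timestamp: float  # seconds


def same_ip_incidents(votes: list[Vote]) -> list[set[str]]:
    """One incident per (post, IP) pair where two or more distinct accounts
    voted within WINDOW_SECONDS of each other."""
    buckets: dict[tuple[str, str], list[Vote]] = defaultdict(list)
    for vote in votes:
        buckets[(vote.post_id, vote.ip)].append(vote)
    incidents = []
    for group in buckets.values():
        group.sort(key=lambda v: v.timestamp)
        accounts: set[str] = set()
        for i, first in enumerate(group):
            window = {v.account for v in group[i:]
                      if v.timestamp - first.timestamp <= WINDOW_SECONDS}
            if len(window) > 1:
                accounts |= window
        if accounts:
            incidents.append(accounts)
    return incidents


def apply_strikes(strikes: dict[str, int], incidents: list[set[str]]) -> dict[str, str]:
    """First incident earns a warning, any later one a ban."""
    actions = {}
    for accounts in incidents:
        for account in accounts:
            strikes[account] = strikes.get(account, 0) + 1
            actions[account] = "warn" if strikes[account] == 1 else "ban"
    return actions
```

Note that a shared household IP trips this exactly like a bot farm does, which matches the warning described for the wife’s upvotes.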

I did it two or three times with 3-5 accounts (never all 8). I also used to ask my friends (N=~8) to upvote stuff too (yes, I was pathetic) and I wasn’t warned/banned. This was five-six years ago.

I think the best solution so far is to require a captcha for every upvote, but that would lead to a poor user experience. I guess it’s a cost-benefit trade-off between user experience degraded by fake upvotes and user experience degraded by required captchas.

If any instance ever requires a captcha for something as trivial as an upvote, I’ll simply stop upvoting on that instance.

I’d just make new usernames whenever I thought of one I thought was funny. I’ve only used this one on Lemmy (so far) but eventually I’ll probably make a new one when I have one of those “Oh shit, that’d be a good username” moments.

You can change your display name on Lemmy to whatever you want whenever you want.

Votes were just a number on reddit too… There was no magic behind them, and as Spez showed us multiple times: even reddit modified counts to make some posts tell something different.

And remember: reddit used to have a horde of bots just to become popular.

Everything on the internet is or can be fake!

Everyone forgot how he and his wife announced their marriage in a subreddit nobody knew about, which suddenly rose to first place on r/all.

reddit used to have a horde of bots just to become popular

Since the launch back in 2005.

I’ve set the registration date on my account back 100 years just to show how easy it is to manipulate Lemmy when you run your own server.

That’s exactly what a vampire that was here 100 years ago would say.

This man is over 100 years old

The only real early adopter

Even older than the project itself. Nice… The alpha… The first, the only one

Happy centennial cake day

If it becomes too big of a problem, instances will whitelist the most popular instances instead of trying to blacklist all the bad ones.

How would that work? How will new instances/servers ever get a chance to grow if the fediverse only allowed those who are already whitelisted? Sorry for my limited knowledge about the fediverse, but it sounds like that goes directly against the base principle of a federated space?

It goes against the base principle, but, at the same time, is something quite possible to happen if things get out of control. Decentralization is complex, and brings several challenges for everyone to face.

shocked shocked

💀😂

There’s a reason everyone comes to my saloon…

Did anyone ever claim that the Fediverse is somehow a solution for the bot/fake vote or even brigading problem?

I think the point is that the Fediverse is severely limited by this vulnerability. It’s not supposed to solve that specific problem, but that problem might need to be addressed if we want the Fediverse to be able to do what we want it to do (put the power back in the hands of the users)

I see your point… votes from a compromised instance (or instances) and such. How is this more or less vulnerable than a centralized model?

I’m not a security expert by any means, though I would imagine this type of attack is easier to defend against if all accounts have to go through one server first. Lemmy seems to be only as strong as its weakest link in this regard, whereas a centralized model is just a single link. I imagine that any effective strategy that works for Lemmy is much easier to implement on a centralized platform, even though the reverse isn’t true.

That said, I’m optimistic that this gets figured out. Centralized platforms have had decades to solve this problem and we’re just getting started.

I would imagine this is the same with bans. I imagine there will eventually be a set of reputation watchdog servers, which might be used instead of this whole “everyone follows the same modlog” approach. The concept of trusting everyone out of the gate seems a little naive.

I don’t have experience with systems like this, but just as sort of a fusion of a lot of ideas I’ve read in this thread, could some sort of per-instance trust system work?

The more positively any instance interacts (posting, commenting, etc.) with main instance ‘A’, the more that instance’s reputation score gets bumped up on instance A. Then, combine that score with the ratio of votes from that instance to the total number of votes in some function to determine the value of each vote cast.
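One possible reading of “some function”, as a sketch only: reputation grows toward 1.0 with positive interactions, and each vote is worth the voter’s instance reputation, damped when that instance supplies a large share of a post’s votes. The growth rule, `dominance_penalty`, and both function names are hypothetical choices.

```python
from __future__ import annotations


def bump_reputation(rep: dict[str, float], instance: str, interactions: int,
                    rate: float = 0.01) -> None:
    """Saturating bump: each positive interaction closes `rate` of the
    remaining gap to 1.0, so reputation grows but never exceeds 1.0."""
    current = rep.get(instance, 0.0)
    rep[instance] = 1.0 - (1.0 - current) * (1.0 - rate) ** interactions


def weighted_score(votes_by_instance: dict[str, int], rep: dict[str, float],
                   dominance_penalty: float = 1.0) -> float:
    """Hypothetical combining function: a vote is worth the instance's
    reputation, divided down when that instance supplies many of the votes."""
    total = sum(votes_by_instance.values())
    if total == 0:
        return 0.0
    score = 0.0
    for instance, count in votes_by_instance.items():
        share = count / total  # this instance's fraction of all votes on the post
        per_vote = rep.get(instance, 0.0) / (1.0 + dominance_penalty * share)
        score += count * per_vote
    return score
```

With the default penalty, an instance supplying every vote on a post has each vote worth at most half its reputation, and an instance with no history contributes nothing.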

This probably isn’t coherent, but I just woke up, and I also have no idea what I’m talking about.

Something like that already happened on Mastodon! Admins got together and marked instances as “bad”. They made a list. And after a few months, everything went back to normal. This kind of self organization is normal on the fediverse.

I believe db0 is working on something like this to help combat the eventual waves of spam and other stuff we’re going to see.

You mean to tell me that copying the exact same system that Reddit was using and couldn’t keep bots out of is still vuln to bots? Wild

Until we find a smarter way or at least a different way to rank/filter content, we’re going to be stuck in this same boat.

Who’s to say I don’t create a community of real people who are devoted to manipulating votes? What’s the difference?

The issue at hand is the post ranking system/karma itself. But we’re prolly gonna be focusing on infosec going forward given what just happened

What did I miss?

Last night a hacker(s) used an exploit to manipulate the content on multiple instances including lemmy.world.

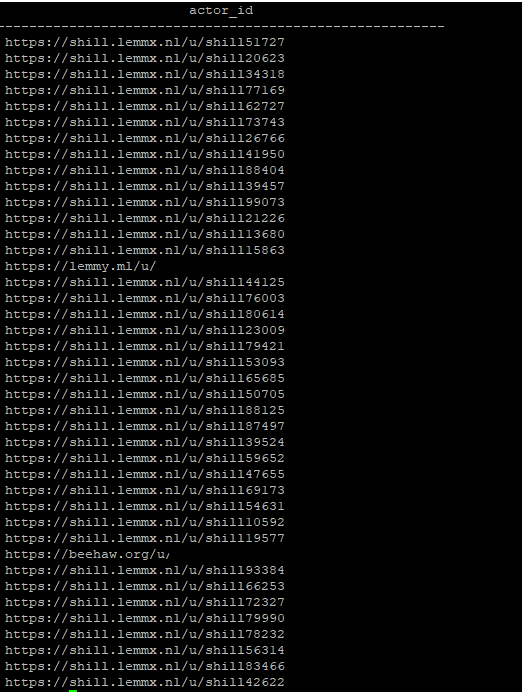

In case anyone’s wondering this is what we instance admins can see in the database. In this case it’s an obvious example, but this can be used to detect patterns of vote manipulation.
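The screenshot’s table isn’t reproduced here, but one pattern it hints at can be sketched: many voters on one post whose usernames share a stem and differ only in trailing digits. This assumes we have the voters’ `actor_id` URLs and that the username is the last path segment (e.g. `https://instance/u/name`); `suspicious_stems` and its threshold are hypothetical.

```python
from __future__ import annotations

import re
from collections import Counter
from urllib.parse import urlsplit

TRAILING_DIGITS = re.compile(r"\d+$")


def account_key(actor_id: str) -> tuple[str, str]:
    """(host, username with trailing digits stripped), e.g. ('bots.example', 'shill')."""
    parts = urlsplit(actor_id)
    username = parts.path.rstrip("/").rsplit("/", 1)[-1]
    return parts.netloc, TRAILING_DIGITS.sub("", username).lower()


def suspicious_stems(actor_ids: list[str], min_accounts: int = 5) -> dict[tuple[str, str], int]:
    """Count distinct voters per (host, stem) and keep the crowded ones."""
    counts = Counter(account_key(a) for a in set(actor_ids))
    return {key: n for key, n in counts.items() if n >= min_accounts}
```

This only catches lazily named bots; randomized usernames need the timing and voting-pattern signals discussed further down the thread.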

“Shill” is a rather on-the-nose choice for a name to iterate with haha

I appreciate it, good for demonstration and just tickles my funny bone for some reason. I will be delighted if this user gets to 100,000 upvotes—one for every possible iteration of shill#####.

Oh cool 👀 What’s the rest of that table? Is the `actor_id` one column in like… an `upvotes` table or something?

`actor_id` is just the full URL of a user. It has the username at the end. That’s why I have censored it.

Honestly, thank you for demonstrating a clear limitation of how things currently work. Lemmy (and Kbin) probably should look into internal rate limiting on posts to avoid this.

I’m a bit naive on the subject, but perhaps there’s a way to detect “more than X votes from more than Y users from this instance” and basically invalidate them?

How do you differentiate between a small instance where 10 votes would already be suspicious vs a large instance such as lemmy.world, where 10 would be normal?

I don’t think instances publish how many users they have and it’s not reliable anyway, since you can easily fudge those numbers.

10 votes within a minute of each other is probably normal. 10 votes all at once, or microseconds of each other, is statistically less likely to happen.

I won’t pretend to be an expert on the subject, but it seems like it’s mathematically possible to set some kind of threshold? If a set percentage of users from an instance are all interacting with one post locally within microseconds of each other, that ought to trigger a flag.
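The threshold idea can be sketched as a sliding window over each instance’s vote timestamps for a single post: flag an instance if at least `min_votes` of its votes land inside any `window`-second span. Both thresholds are hypothetical; in practice `min_votes` could be scaled by the instance’s reported user count.

```python
from __future__ import annotations

from collections import defaultdict


def burst_flags(votes: list[tuple[str, float]], window: float = 0.5,
                min_votes: int = 10) -> set[str]:
    """votes: (instance, timestamp_seconds) pairs for ONE post."""
    by_instance: dict[str, list[float]] = defaultdict(list)
    for instance, ts in votes:
        by_instance[instance].append(ts)
    flagged = set()
    for instance, stamps in by_instance.items():
        stamps.sort()
        left = 0
        for right, ts in enumerate(stamps):
            # Shrink the window from the left until it spans <= `window` seconds.
            while ts - stamps[left] > window:
                left += 1
            if right - left + 1 >= min_votes:
                flagged.add(instance)
                break
    return flagged
```

As the thread points out, a bot server can add random delays between votes, so this only catches the crudest case.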

Not all instances advertise their user counts accurately, but they’re nevertheless reflected through a NodeInfo endpoint.
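A sketch of reading those counts, assuming the standard NodeInfo discovery flow: `/.well-known/nodeinfo` lists schema links, and the linked document has `usage.users.total`, `activeMonth` and `activeHalfyear`. The helper names are hypothetical, and the numbers are self-reported, so a bot instance can still lie.

```python
from __future__ import annotations

import json
from urllib.request import urlopen

NODEINFO_REL_PREFIX = "http://nodeinfo.diaspora.software/ns/schema/"


def _version(rel: str) -> tuple[int, ...]:
    try:
        return tuple(int(p) for p in rel[len(NODEINFO_REL_PREFIX):].split("."))
    except ValueError:
        return (0,)


def pick_nodeinfo_href(well_known: dict) -> str | None:
    """Choose the highest schema version advertised in /.well-known/nodeinfo."""
    links = [link for link in well_known.get("links", [])
             if link.get("rel", "").startswith(NODEINFO_REL_PREFIX)]
    if not links:
        return None
    return max(links, key=lambda link: _version(link["rel"]))["href"]


def user_counts(nodeinfo: dict) -> dict[str, int | None]:
    users = nodeinfo.get("usage", {}).get("users", {})
    return {key: users.get(key) for key in ("total", "activeMonth", "activeHalfyear")}


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def instance_user_counts(domain: str) -> dict[str, int | None]:
    """Network call: discovery document first, then the NodeInfo document itself."""
    href = pick_nodeinfo_href(fetch_json(f"https://{domain}/.well-known/nodeinfo"))
    return user_counts(fetch_json(href)) if href else {}
```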

Surely the bot server can just set up a random delay between upvotes to circumvent that sort of detection

This is something that will be hard to solve. You can’t really effectively discern between a large instance with a lot of users and an instance with a lot of fake users that it makes look like real users. Any kind of protection I can think of, for example based on the activity of the users, can simply be faked by the bot server.

The only solution I see is to just publish the vote% or vote counts per instance, since that’s what the local server knows, and let us personally ban instances we don’t recognize or care about, so their votes won’t count in our feed.

That would be the best way to do it. I guess if you go further, you could let users filter which instances they would like to “count”, and even have whole filter lists made by the community.
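A minimal sketch of per-instance vote tallies combined with a user-chosen block list built from community filter lists. The one-domain-per-line list format with `#` comments is an assumption, and all function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def per_instance_tally(votes: list[tuple[str, int]]) -> Counter:
    """votes: (voter's instance, +1 or -1) pairs as the local server sees them."""
    tally: Counter = Counter()
    for instance, value in votes:
        tally[instance] += value
    return tally


def load_filter_lists(*lists: list[str]) -> set[str]:
    """Merge community lists (assumed: one domain per line, '#' for comments)."""
    blocked = set()
    for lines in lists:
        for line in lines:
            entry = line.strip().lower()
            if entry and not entry.startswith("#"):
                blocked.add(entry)
    return blocked


def filtered_score(tally: Counter, blocked: set[str]) -> int:
    """Score as this user wants it counted: votes from blocked instances are ignored."""
    return sum(value for instance, value in tally.items() if instance not in blocked)
```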

I think it would actually be pretty easy to detect because the bots would vote very similarly to each other (otherwise what’s the point), which means it would look very different from the distribution of votes coming from an organic user base
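One simple way to measure “bots vote very similarly” is the Jaccard similarity of two accounts’ upvote sets. A sketch, with hypothetical thresholds; the pairwise loop is quadratic, so a real deployment would need something like MinHash to scale.

```python
from __future__ import annotations

from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """|A ∩ B| / |A ∪ B|: 1.0 means identical voting histories."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def lockstep_pairs(upvotes: dict[str, set[str]], threshold: float = 0.8,
                   min_votes: int = 5) -> list[tuple[str, str, float]]:
    """upvotes maps account -> set of upvoted post ids. Accounts with fewer
    than `min_votes` votes are skipped, since tiny histories overlap by chance."""
    active = {acct: posts for acct, posts in upvotes.items() if len(posts) >= min_votes}
    pairs = []
    for (a, pa), (b, pb) in combinations(sorted(active.items()), 2):
        score = jaccard(pa, pb)
        if score >= threshold:
            pairs.append((a, b, score))
    return pairs
```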

I like that idea. A twist on it would be to divide the votes on a post by the total vote count or user count for that instance, so each instance has the same proportional say as any other. e.g. if a server with 1000 people gives 1000 upvotes, those count the same as a server with 10 people giving 10 votes.

Wouldn’t that make it actually a lot worse? As in, if I just make my own instance with one user total, I’ll just singlehandedly outvote every other server.
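A sketch of the proportional scheme, where each instance contributes the fraction of its users who upvoted, so every instance has the same maximum say of 1.0. It assumes self-reported user counts, and it makes the single-user-instance objection concrete.

```python
from __future__ import annotations


def proportional_score(votes_by_instance: dict[str, int],
                       users_by_instance: dict[str, int]) -> float:
    """Sum over instances of (upvotes from instance / users on instance)."""
    score = 0.0
    for instance, votes in votes_by_instance.items():
        users = users_by_instance.get(instance, 0)
        if users > 0:
            score += min(votes, users) / users  # cap at 1.0 per instance
    return score
```

A 1000-user server giving 1000 upvotes and a 10-user server giving 10 both add 1.0, but so does a one-user instance giving a single upvote, which is why spinning up many tiny instances would game this.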

Congratulations! You’ve just reinvented the electoral college. And that works so brilliantly… /s

Web of trust is the solution. Show me vote totals that only count people I trust, 90% of people they trust, 81% of people they trust, etc. (0.9 multiplier should be configurable if possible!)
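A sketch of that weighting: walk the trust graph breadth-first, give people I trust directly 1.0, the next ring 0.9, then 0.81, and so on, with `decay` configurable. Using the shortest trust path is an assumption (with a uniform decay below 1 it also gives the highest weight); `max_hops` and the function names are hypothetical.

```python
from __future__ import annotations

from collections import deque


def trust_weights(trusts: dict[str, set[str]], me: str, decay: float = 0.9,
                  max_hops: int = 6) -> dict[str, float]:
    """Direct trust = 1.0, then decay, decay**2, ... by shortest path length."""
    hops = {me: 0}
    queue = deque([me])
    while queue:
        person = queue.popleft()
        if hops[person] >= max_hops:
            continue
        for other in trusts.get(person, set()):
            if other not in hops:
                hops[other] = hops[person] + 1
                queue.append(other)
    return {p: decay ** max(h - 1, 0) for p, h in hops.items()}


def trusted_total(votes: dict[str, int], weights: dict[str, float]) -> float:
    """votes maps voter -> +1/-1; voters outside my web of trust count for nothing."""
    return sum(value * weights.get(voter, 0.0) for voter, value in votes.items())
```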

Fwiw, search engines need to figure out what is “reliable”. The original implementations were, well if BananaPie.com is referenced by 10% of the web, it must be super trustworthy! So people created huge networks of websites that all linked each other and a website they wanted to promote in order to gain reliability.

It could be implemented on both the server and the client, with the client trusting the server most of the time and spot checking occasionally to keep the server honest.
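A sketch of the spot-check idea: accept the server’s totals by default, but for a random sample of posts recompute the total from the raw vote objects and report mismatches. `fetch_raw_votes` is a hypothetical callable the client would supply; the 5% rate is arbitrary.

```python
from __future__ import annotations

import random
from collections.abc import Callable


def spot_check(server_totals: dict[str, int],
               fetch_raw_votes: Callable[[str], list[int]],
               sample_rate: float = 0.05,
               rng: random.Random | None = None) -> list[str]:
    """Return post ids whose server-reported total disagrees with the
    sum of the raw +1/-1 votes; only sampled posts are fetched."""
    rng = rng or random.Random()
    mismatches = []
    for post_id, reported in server_totals.items():
        if rng.random() < sample_rate and sum(fetch_raw_votes(post_id)) != reported:
            mismatches.append(post_id)
    return mismatches
```

If a server inflates k posts and each is sampled independently with probability p, the chance of catching at least one is 1 - (1 - p)^k; for p = 0.05 and k = 20 that is roughly 64%.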

The origins of upvotes and downvotes are already revealed on objects on Lemmy and most other fediverse platforms. However, this is not an absolute requirement; there are cryptographic solutions that allow verifying vote aggregation without identifying vote origins, but they are mathematically expensive.

Any solution that only works because the platform is small and that doesn’t scale is a bad solution though.

It’s nothing. You don’t recompute everything for each page refresh. Your client pulls in the data, computes reputation totals over time, and discards old raw data when its local cache is full.

Historical daily data gets packaged, compressed, and cross signed by multiple high reputation entities.

When there are doubts about a user’s history, your client drills down into those historical packages and reconstitutes their history to recalculate their reputation.

Whenever a client does that work, they publish the result and sign it with their private keys and that becomes a web of trust data point for the entire network.

Only clients and the network matter, servers are just untrustworthy temporary caches.
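A sketch of the client-side part of this: running totals updated on each event, raw per-day events kept only until the cache is full, then the oldest day packed into a compressed archive and dropped from memory, with a replay path for drilling down into one user’s history. It assumes days arrive in order. A SHA-256 digest stands in for the cross-signing by high-reputation entities; real signatures would need public-key cryptography, which is left out. `ReputationCache` is hypothetical.

```python
from __future__ import annotations

import hashlib
import json
import zlib
from collections import OrderedDict, defaultdict


class ReputationCache:
    def __init__(self, max_days: int = 30):
        self.max_days = max_days
        self.totals: dict[str, int] = defaultdict(int)   # user -> running reputation
        self.raw: OrderedDict[str, list] = OrderedDict()  # day -> [(user, delta), ...]
        self.archive: dict[str, tuple[bytes, str]] = {}   # day -> (compressed blob, digest)

    def ingest(self, day: str, user: str, delta: int) -> None:
        # Totals are updated incrementally, never recomputed on refresh.
        self.totals[user] += delta
        self.raw.setdefault(day, []).append((user, delta))
        while len(self.raw) > self.max_days:
            old_day, events = self.raw.popitem(last=False)  # evict the oldest day
            blob = zlib.compress(json.dumps(events).encode())
            self.archive[old_day] = (blob, hashlib.sha256(blob).hexdigest())

    def replay(self, user: str) -> int:
        """Drill down: rebuild one user's total from archives plus cached raw data."""
        total = 0
        for blob, digest in self.archive.values():
            if hashlib.sha256(blob).hexdigest() != digest:
                raise ValueError("archive does not match its digest")
            total += sum(d for u, d in json.loads(zlib.decompress(blob)) if u == user)
        for events in self.raw.values():
            total += sum(d for u, d in events if u == user)
        return total
```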

That sounds a bit hyperbolic.

You can externalize the web of trust with a decentralized system, and then just link it to accounts at whatever service you’re using. You could use a browser extension, for example, that shows you whether you trust a commenter or poster.

That list wouldn’t get federated out, it could live in its own ecosystem, and update your local instance so it provides a separate list of votes for people in your web of trust. So only your admin (which could be you!) would know who you trust, and it would send two sets of vote totals to your client (or maybe three if you wanted to know how many votes it got from your instance alone).
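A sketch of the “two or three sets of vote totals” the local instance would send to the client. It assumes voters are identified as `user@domain` handles and that the web-of-trust set lives only on the viewer’s own instance; `VoteTotals` and `totals_for_client` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VoteTotals:
    everyone: int
    web_of_trust: int
    local_instance: int


def totals_for_client(votes: list[tuple[str, int]], trusted: set[str],
                      local_domain: str) -> VoteTotals:
    """votes: (voter handle like 'alice@lemmy.example', +1/-1)."""
    everyone = sum(v for _, v in votes)
    wot = sum(v for actor, v in votes if actor in trusted)
    local = sum(v for actor, v in votes if actor.rsplit("@", 1)[-1] == local_domain)
    return VoteTotals(everyone, wot, local)
```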

So no, I don’t think it needs to be invasive at all.

What if the web of trust is calculated with upvotes and downvotes? We already trust server admins to store those.

I think that could work well. At the very least, I want the feature where I can see how many times I’ve upvoted/downvoted a given individual when they post.
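That feature is a small per-author counter kept on the client. This sketch keeps it local and non-transitive, since upvoting someone’s content is not the same as trusting them. `MyVoteHistory` and the `+up / -down` badge format are hypothetical.

```python
from collections import defaultdict


class MyVoteHistory:
    """Counts how often I have up- or downvoted each author."""

    def __init__(self):
        self.counts = defaultdict(lambda: [0, 0])  # author -> [upvotes, downvotes]

    def record(self, author: str, value: int) -> None:
        if value > 0:
            self.counts[author][0] += 1
        elif value < 0:
            self.counts[author][1] += 1

    def badge(self, author: str) -> str:
        # .get() does not create an entry for authors I have never voted on.
        up, down = self.counts.get(author, (0, 0))
        return f"+{up} / -{down}"
```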

That wouldn’t/shouldn’t give you transitive data imo, because voting for something doesn’t mean you trust them, just that the content is valuable (e.g. it could be a useful bot).

I think people often forget that federation is not a new thing; it was the original design for Internet communication services. Email, which predates the Internet, is also a federated network, and the most popular and widely adopted mode of Internet communication of them all. It also had spam issues, and there were many solutions for them.

The one I liked the most was Hashcash, since it requires no trust. It’s one of the earliest proof-of-work systems, and it was an inspiration for blockchains.

Nowadays email spam filters, especially the proprietary ones from Google or Yahoo, make independent mail servers much harder to maintain: their mail keeps getting labeled as spam even with DKIM, DMARC, correct SPF records, and a clean, reputable public IP.

I don’t know what the answer is, but I hope it is something more environmentally friendly than burning cash on electricity. I wonder if there could be some way to prove time spent but not CPU.

This could become a problem on posts only relevant on one server

Obviously, on the server the posts are from, you display the full vote count. There, the admins know the accounts, can vet them, etc.

This would rather be for detecting bad actors (instances) and alerting the admin, who can then kick them off the federation, the same as for other types of offences.

Small instances are cheap, so we need a way to prevent 100 bot instances running on the same server from gaming this too
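One cheap signal for “100 bot instances on the same server” is that their domains resolve into the same network. A sketch, assuming the caller has already resolved each domain to an IP (e.g. with `socket.gethostbyname`); the /24 and /64 prefixes and the threshold are hypothetical. Many legitimate instances sit behind shared CDN or hosting IPs, so this is a hint for an admin, not proof.

```python
from __future__ import annotations

import ipaddress
from collections import defaultdict


def shared_hosting_groups(resolved: dict[str, str],
                          min_instances: int = 3) -> dict[str, list[str]]:
    """resolved maps instance domain -> IP address string.
    Groups domains whose IPs share a /24 (IPv4) or /64 (IPv6) network."""
    groups: dict[str, list[str]] = defaultdict(list)
    for domain, ip in resolved.items():
        addr = ipaddress.ip_address(ip)
        prefix = 24 if addr.version == 4 else 64
        net = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
        groups[str(net)].append(domain)
    return {net: sorted(domains) for net, domains in groups.items()
            if len(domains) >= min_instances}
```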
