- cross-posted to:

- [email protected]

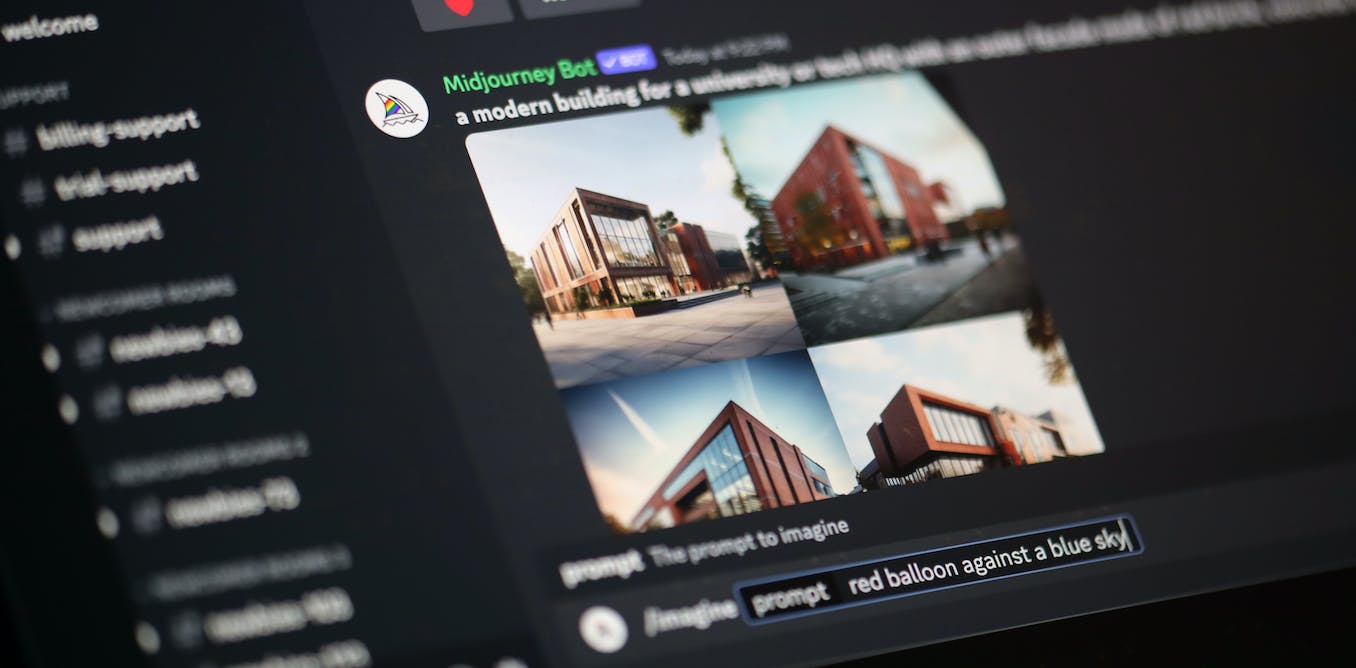

Data poisoning: how artists are sabotaging AI to take revenge on image generators

As AI developers indiscriminately suck up online content to train their models, artists are seeking ways to fight back.

This system runs on the assumption that (A) massive generalized scraping is still required, (B) the metadata of the original image is maintained, and (C) no transformation has been applied to the poisoned picture prior to training (Stable Diffusion is 512x512). Nowhere in the linked paper do the authors say they conditioned the poisoned data to conform to the data set. This appears to be a case of fighting the last war.
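To make point (C) concrete, here is a toy sketch of how a resize step can change a pixel-level perturbation before a model ever sees it. Everything in it is an assumption for illustration: the 8x8 grayscale image, the +/-4 checkerboard "perturbation", and the `box_downsample` helper are hypothetical and are not taken from the linked paper. It says nothing about whether a real poisoning method survives real preprocessing.

```python
def box_downsample(img, factor):
    """Shrink a 2D grayscale image by averaging factor x factor blocks."""
    height, width = len(img), len(img[0])
    out = []
    for y in range(0, height - height % factor, factor):
        row = []
        for x in range(0, width - width % factor, factor):
            block = [img[y + dy][x + dx]
                     for dy in range(factor)
                     for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def max_abs_diff(a, b):
    """Largest per-pixel difference between two same-sized images."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


SIZE = 8  # hypothetical tiny image; real training images are far larger

# Flat gray "clean" image.
clean = [[100 for _ in range(SIZE)] for _ in range(SIZE)]

# Hypothetical high-frequency perturbation: a +/-4 checkerboard.
perturbed = [[clean[y][x] + (4 if (x + y) % 2 == 0 else -4)
              for x in range(SIZE)]
             for y in range(SIZE)]

# Difference between clean and perturbed, before and after a 2x downsample.
before = max_abs_diff(clean, perturbed)
after = max_abs_diff(box_downsample(clean, 2), box_downsample(perturbed, 2))
print(before, after)
```

In this contrived case the averaging cancels the checkerboard entirely, which is the best case for the argument above. A perturbation built to survive resizing would behave differently, so treat this only as a picture of why preprocessing matters.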

It is likely a typo, but “last AI war” sounds ominous 😅